Debugging Linkerd for mislabelled route metrics

This post is cross-posted from the Attest Product & Technology medium publication.

At Attest we use Linkerd. Linkerd is a service mesh, it has features that help add observability and reliability to your Kubernetes clusters.

This is a story about debugging Linkerd, to find out why our metrics were being misrecorded.

One part of Linkerd (linkerd-proxy) runs as a sidecar,

this sidecar is attached to your deployment’s pods and the traffic going in and out of the pod passes through linkerd-proxy.

Linkerd can now see the URL paths (endpoints) that your service is being called on,

and can record Prometheus metrics on those endpoints.

Linkerd metrics and routes

Linkerd, through linkerd-proxy sidecar can record metrics on your service’s endpoints.

Some of your endpoints will have IDs in their URLs, for example:

/api/org/86325e01-cc8c-4603–9b31-b5117aa0f3cd/members

Linkerd records the URL into an rt_route label on range of Prometheus metrics.

If linkerd-proxy recorded the URL directly into the metric as a label,

it would create a huge number of different labels for essentially the same endpoint.

That would be bad for your metrics, and bad for your Prometheus server.

To fix the problem of IDs in the URL,

Linkerd allows you to configure a custom Kubernetes resources called a ServiceProfile.

These ServiceProfiles allow you to specify the routes that your service can be called on,

and provide a regex pattern to match that route. This means we can record our rt_route label as:

/api/org/{org_id}/members

Here’s an example:

| |

This ServiceProfile would use the pathRegex field to match the incoming requests,

and set the label rt_route="GET /api/org/{org_id}/members" in the metrics recorded.

It would produce a metric like:

request_total{rt_route="GET /api/org/{org_id}/members"} 1

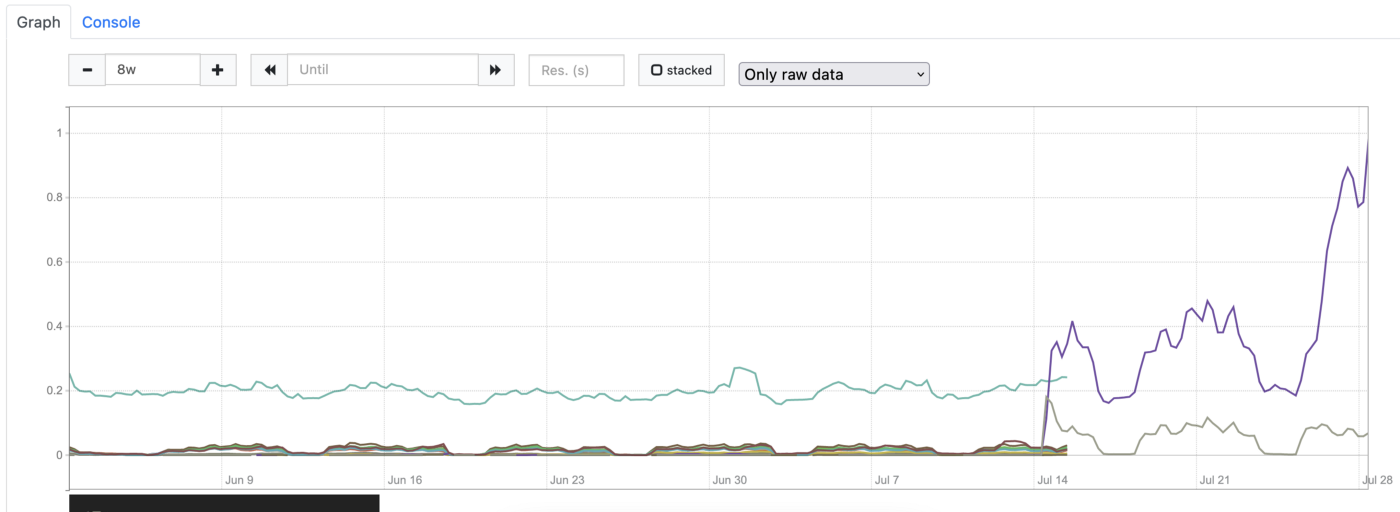

Our metrics started to look weird

We started to see increase rates of requests calling an endpoint that was for an unreleased feature. This was weird, because no one should be using that endpoint yet.

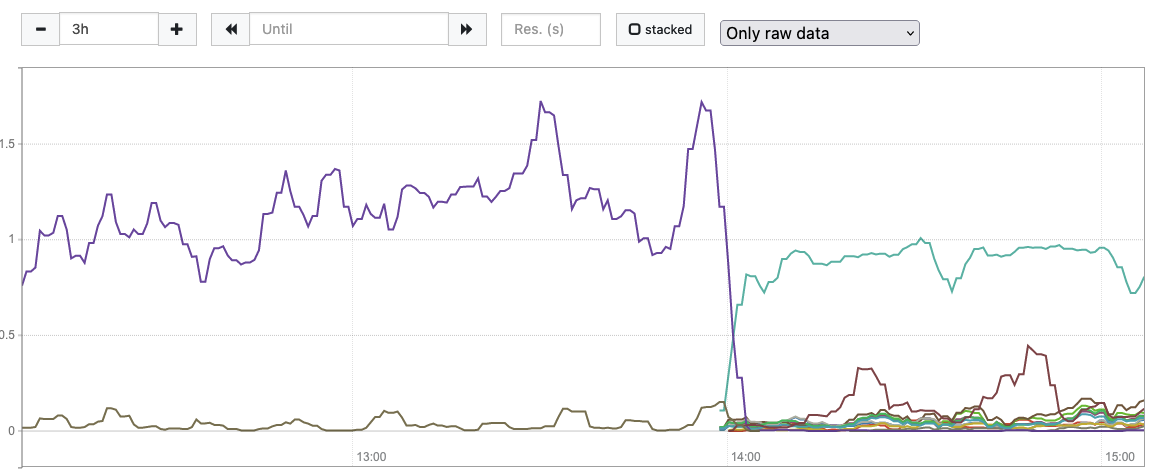

We also saw a bunch of our metrics disappear. 😱

We looked at our current metrics, and saw a few problems:

- Some metrics were not being recorded at all. The lines just stop on the chart.

- The rates for some metrics had shot up at about the same time the other metrics stopped.

The two endpoints that were remaining and returning a large rate were:

GET /api/v2/study/{id}/resultsPOST /api/study/{id}/generated

Debugging using tap

Linkerd has a really strong feature called tap.

Tap, or linkerd viz tap using the linkerd CLI tool,

lets you see the requests and responses passing through the linkerd-proxy pods.

It has an output like:

| |

From the output of tap we can see both the raw path under :path and the rt_route that’s been matched to that path.

We started to see tap output like this:

| |

:path, and the assigned rt_route were completely different. Weird!At least we now knew why the rates for these unused endpoints were shooting up.

A number of paths were being recorded under this rt_route when their regex didn’t actually match.

By whyyyy though?

When debugging something really unknown like this, I like to create a list of all the things that I can imagine breaking. My list looked something like:

- Bad regex in the ServiceProfile.

- Bad parsing of the ServiceProfile by the proxy, or other inter-service communication weirdness.

- Incorrect assignment of

rt_routebylinkerd-proxy, a bug in the proxy sidecar. - Something temporal, a previous

rt_routebeing kept for later requests, and reused as thert_route.

It’s easy and natural to assume that an issue like this is caused by a bug or problem in the opensource project. I scanned the issue list, and nothing jumped out. So I suppressed all my urges to assume it wasn’t our fault, and cracked on investigating our config and code.

More tapping

Using ~5mins of tap data from two different services, parsing it into a CSV, and building a Pivot Table on the data in a Google sheet I could see:

- Routes were consistently mis-labelled. For a given route

A, it was always consistently labelled asrt_route=B. There were about 14 different routes affected, and they were all consistently receiving the same incorrectrt_routelabel. - Only one service was affected. We could narrow down the problem. If we could see mislabelled routes in multiple services then we’d know it was more likely a problem with the proxy, and not a problem with our service/config. But; this only affected one service. Which suggests it’s something to do with this service. The config became the primary suspect.

Test the regexs

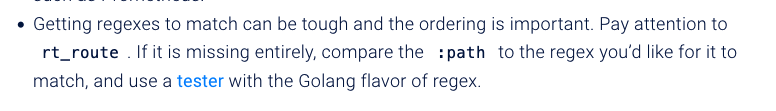

In the troubleshooting doc from Linkerd I found this segment (at the time of writing). It explains that the regex should match the Golang dialect.

The important bit says:

Compare the

:pathto the regex you’d like to match, and use a tester with the Golang flavour of regex.

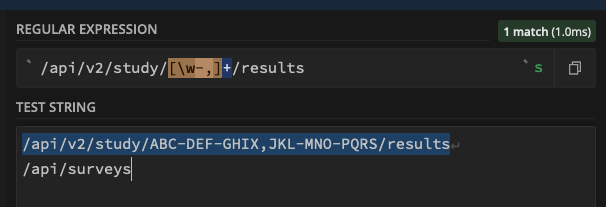

Using regex 101, I tested each of our pathRegex fields from the ServiceProfile,

and found that there’s no way that /api/surveys should match the regex for the route GET /api/v2/study/{id}/results.

You can try it yourself, here is the regex:

/api/v2/study/[\w-,]+/results

To test some parts of our product, we allow the endpoints to be called with a CSV of IDs,

like /api/v2/study/ABC-DEF-GHIX,JKL-MNO-PQRS/results.

So we need to match the word characters, the hyphens, and the commas in our regex.

Regex 101 confirmed our regex was working:

Time to build a timeline

All the details we know surrounding the problem suggested it was a problem with config, but regex 101 was reporting that the config should work. We knew it affected only one service, suggesting it’s not a proxy problem. We knew the routes were labelled incorrectly but always incorrectly in the same way, which rules out a kind of temporal problem. But we could verify the config was correct, based on the flavor of regex reported in the Linkerd troubleshooting doc.

The only thing left to do was build a timeline. In general, stuff doesn’t just break on its own. It normally breaks as a result of a change. Building a timeline can help you narrow down when the change happened, and what the change was. We could see from the metrics when the problem started to happen. So, we built a timeline of;

- Config changes leading up to and after the start of the issue.

- Deployments, and releases before and after the start of the issue.

- Changes to the

linkerd-proxyversion and infrastructure before and after the issue.

We found just one change in the lead up to the problem.

It was a change to the ServiceProfile pathRegex, and it touched the routes affected.

| |

We added a comma to our regex, to make sure we matched the testing CSV calls we might make to results.

The problem must be caused by this change

Nothing else changed in the run-up to the problem. The single change that we did make was for the exact path that was breaking. Everything pointed to that regex as the problem. But, we’d already used regex 101 to rule out problems with the regex.

It’s now time to check our assumptions, we’ve exhausted the set of good or obvious ideas. And I was increasingly convinced it must be the change to the regex.

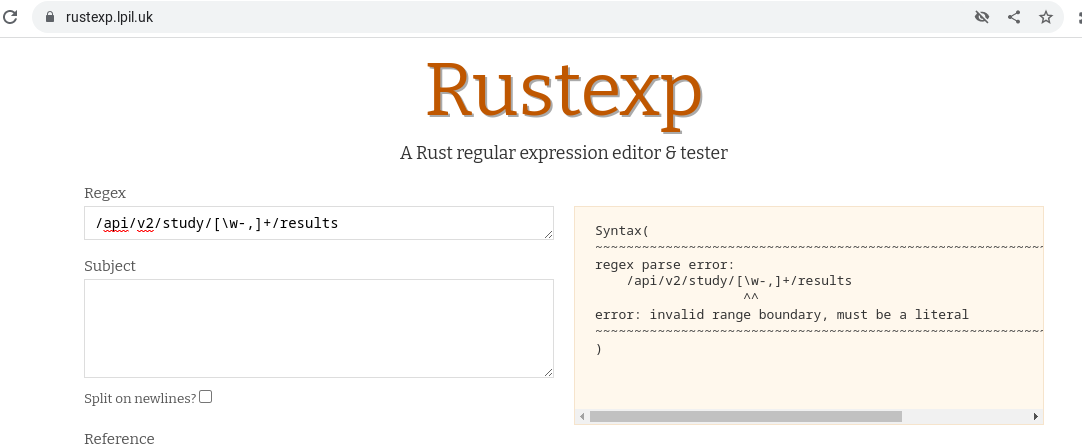

The docs say that the pathRegex field uses the Golang flavour of regex.

The linkerd-proxy is written in Rust. Suspicious.

Uh-oh!

- In Golang regex flavour, this is a class containing all “word chars” (

\w), the literal-and the literal,. The hyphen is not considered a request for a range of chars, because it doesn’t make sense to open a range with a character class. - In Rust, this is an invalid regex, because it doesn’t make sense to open a range with a character class.

This invalid regex was leaving several routes in our ServiceProfile without valid regexes, which made them eligible to match anything rather than nothing. Then, when you have overlapping matching rules, ordering within the file becomes important… leading to the consistent mislabelling of the routes.

Fixed!

We reordered the regex to have the hyphen as the first character, so it doesn’t act as a range.

- The fixed part of our regex:

[-\w,]+

We rolled out the fix, and could verify pretty quickly that it was working. Our routes were being correctly labelled again, and our metrics returned to normal. Phew!

We’ve also opened an issue with Linkerd to help get the docs fixed. The troubleshooting docs suggest that the Golang flavour of regex is used by the proxy, but this is wrong.

Closing thoughts

Let this go down in the unwritten style guide of regexes:

[], and in no other pofithion, lest thine regex up and devour thou favoured hound.Or in simpler terms;

- first in the [...]. Putting it last is correct and legal, but someone might add something after it. And then who knows what happens!Linkerd is a brilliant tool, and the linkerd viz tap command is really powerful.

The blame lies somewhere between the tool (with different flavours of regex), and us (not writing safe regex patterns).

But without the DevEx and debugging tools built into Linkerd, it would have been much harder to debug the issue.